Harry Potter NLP 2

Michael Siebel

April 2020

Sentiment Analysis for:

Harry Potter and the Philosopher’s Stone (1997)

Harry Potter and the Chamber of Secrets (1998)

Harry Potter and the Prisoner of Azkaban (1999)

Harry Potter and the Goblet of Fire (2000)

Harry Potter and the Order of the Phoenix (2003)

Harry Potter and the Half-Blood Prince (2005)

Harry Potter and the Deathly Hallows (2007)

Bottom Line Up Front

Below is a sentiment analysis of the Harry Potter Series. It seeks to find answers to the questions:

- Which of the heroes and villains have the most positive/negative sentiment?

- How does the sentiment of these heroes and villains change across each book?

- Which Hogwarts House contains the most positive sentiment?

- How does sentiment usually change over the course of one school year?

It finds that:

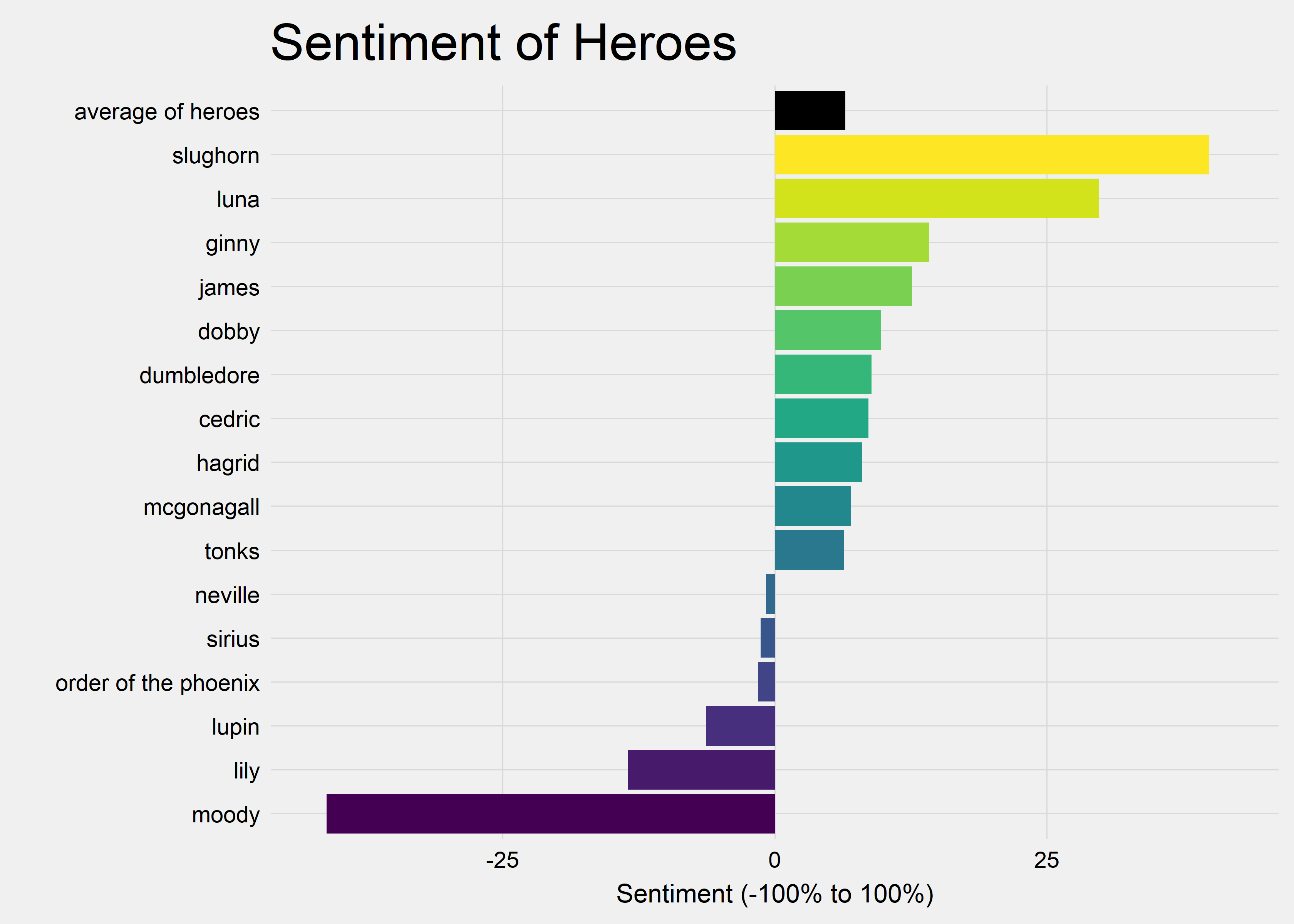

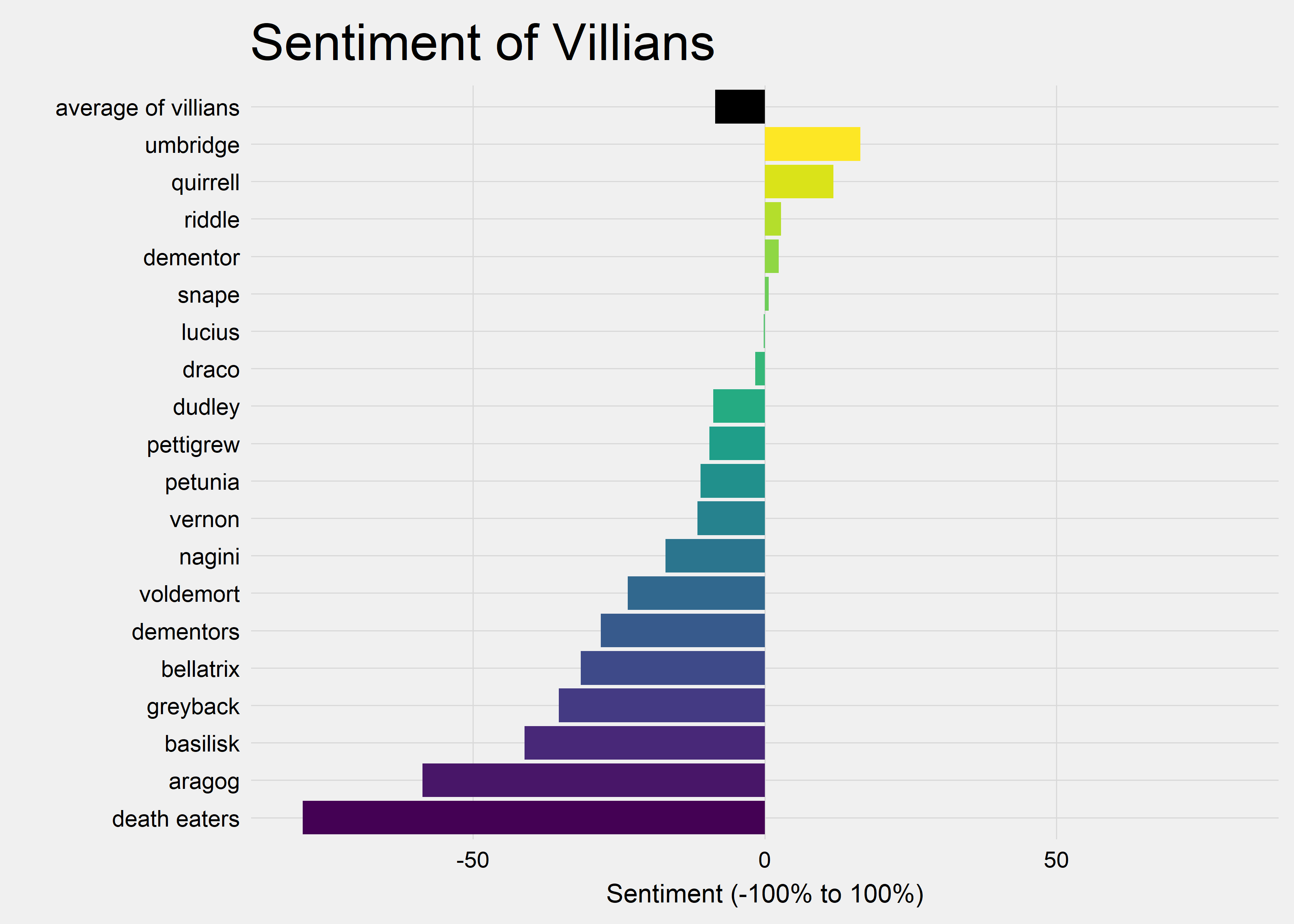

Heroes and Villains Rankings

Among the heroes, surprisingly, Professor Slughorn had the most positive text around him, with Luna Lovegood second. The text around the Death Eaters contained the most negative sentiment. Unsurprisingly, the werewolf (Greyback), ancient snake (Basilisk), and giant spider (Aragog) all had extremely negative sentiment.

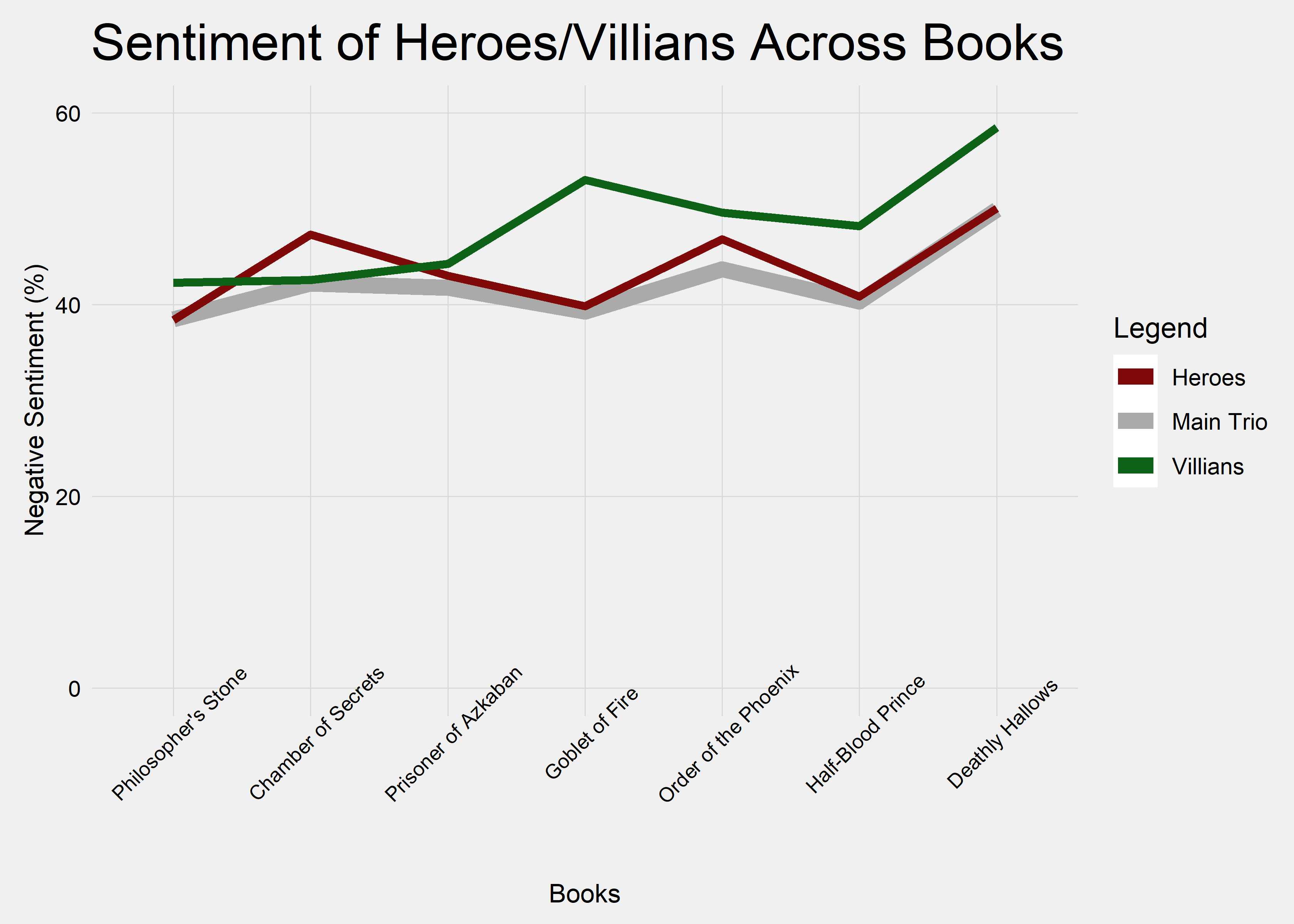

Heroes and Villains Across the Books

Negative sentiment in the text around the villains grew substantially as the story progressed, while the sentiment in the text around the heroes stayed relatively stable. The Deathly Hallows was the book featuring the most negative sentiment.

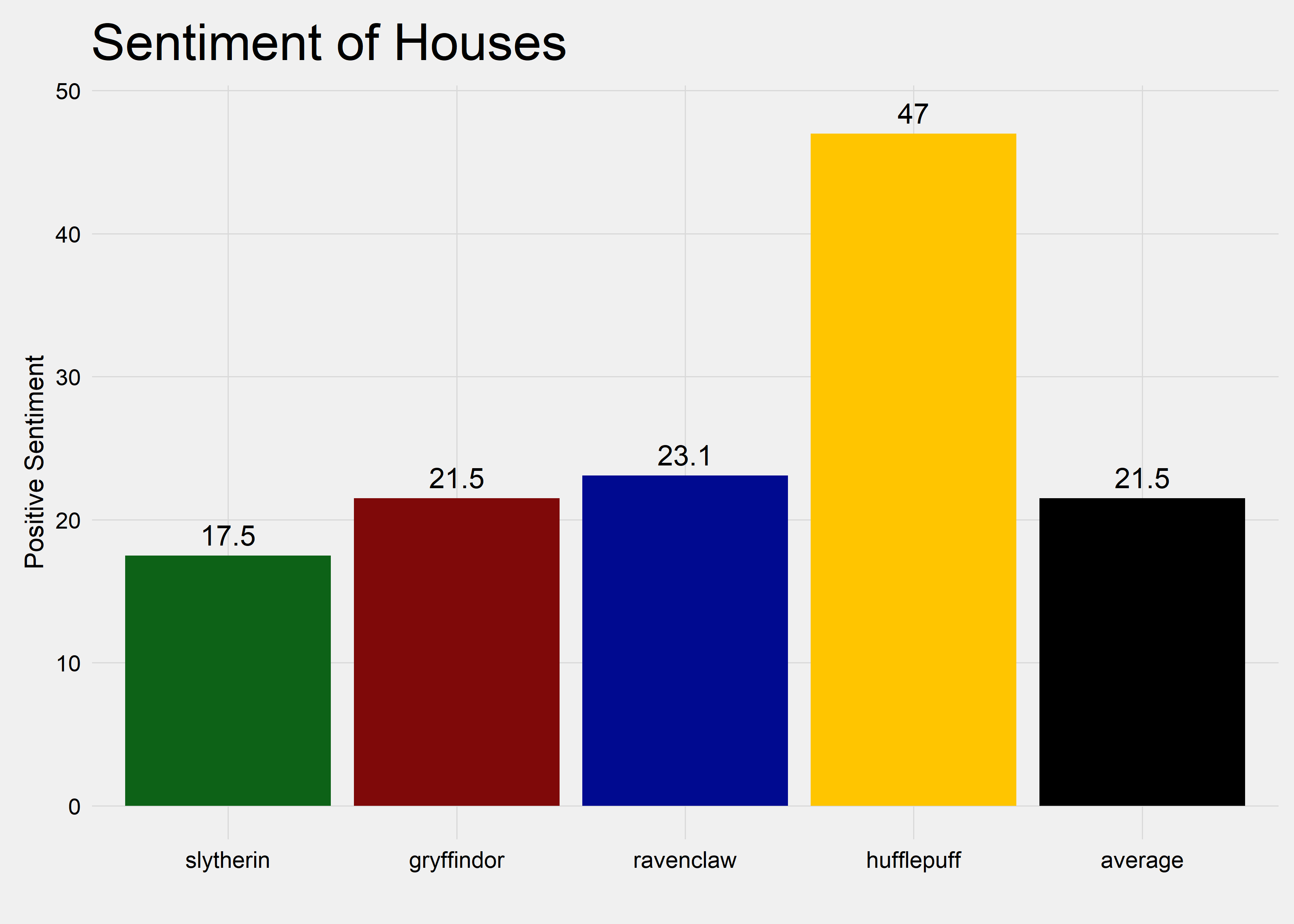

Treatment of the Hogwarts Houses

As it should be, Hufflepuff was the house with the most positive sentiment in the text around it.

Sentiment Across the Pages

The end of each book was negative until the final chapter, which always seems to end on a positive note - whether the heroes were victorious or Harry’s friends were providing him some support after a more difficult ending.

Setup

rm(list = ls())

gc()

# install.packages('pacman') install.packages('remotes') library(remotes)

# install.packages('devtools') library(devtools)

# remotes::install_github('nrguimaraes/sentimentSetsR') library(magrittr)

# devtools::install_github('wch/webshot') webshot::install_phantomjs()

# library(BiocManager)

# BiocManager::install('https://bioconductor.org/biocLite.R')

# source('https://bioconductor.org/biocLite.R') biocLite('EBImage')

library(pacman)

pacman::p_load(devtools, knitr, magrittr, dplyr, ggplot2, rvest, sentimentSetsR,

caret, textTinyR, text2vec, tm, tidytext, stringr, stringi, SnowballC, stopwords,

wordcloud, prettydoc, cowplot, utf8, corpus, glue, topicmodels, stm, wordcloud2,

htmlwidgets, viridis)

knitr::opts_chunk$set(echo = TRUE, message = FALSE, comment = NA, warning = FALSE,

tidy = TRUE, results = "hold", cache = FALSE, dpi = 240, out.width = "75%")

# Custom functions

# Remove quotation marks

pasteNQ <- function(...) {

output <- paste(...)

noquote(output)

}

pasteNQ0 <- function(...) {

output <- paste0(...)

noquote(output)

}

# Percentages

propTab <- function(data, exclude = NULL, useNA = "no", dnn = NULL, deparse.level = 1,

digits = 0) {

round(table(data, exclude = exclude, useNA = useNA, dnn = dnn, deparse.level = deparse.level)/NROW(data) *

100, digits)

}

## Chart Template

Grph_theme <- function() {

palette <- brewer.pal("Greys", n = 9)

color.background = palette[2]

color.grid.major = palette[3]

color.axis.text = palette[6]

color.axis.title = palette[7]

color.title = palette[9]

theme_bw(base_size = 9) + theme(panel.background = element_rect(fill = color.background,

color = color.background)) + theme(plot.background = element_rect(fill = color.background,

color = color.background)) + theme(panel.border = element_rect(color = color.background)) +

theme(panel.grid.major = element_line(color = color.grid.major, size = 0.25)) +

theme(panel.grid.minor = element_blank()) + theme(axis.ticks = element_blank()) +

theme(legend.position = "none") + theme(legend.title = element_text(size = 11,

color = "black")) + theme(legend.background = element_rect(fill = color.background)) +

theme(legend.text = element_text(size = 9, color = "black")) + theme(strip.text.x = element_text(size = 9,

color = "black", vjust = 1)) + theme(plot.title = element_text(color = color.title,

size = 20, vjust = 1.25)) + theme(axis.text.x = element_text(size = 9, color = "black")) +

theme(axis.text.y = element_text(size = 9, color = "black")) + theme(axis.title.x = element_text(size = 10,

color = "black", vjust = 0)) + theme(axis.title.y = element_text(size = 10,

color = "black", vjust = 1.25)) + theme(plot.margin = unit(c(0.35, 0.2, 0.3,

0.35), "cm"))

}

## Clean Corpus

basicclean <- function(rawtext) {

# Set to lowercase

rawtext <- tolower(rawtext)

# Fix apostrophe

rawtext <- gsub("’", "'", rawtext)

# Expand contractions

fix_contractions <- function(rawtext) {

# "won't" and "shan't" are irregular, so expand them before the generic "n't" rule

rawtext <- gsub("won't", "will not", rawtext)

rawtext <- gsub("can't", "cannot", rawtext)

rawtext <- gsub("can not", "cannot", rawtext)

rawtext <- gsub("shan't", "shall not", rawtext)

rawtext <- gsub("n't", " not", rawtext)

rawtext <- gsub("'ll", " will", rawtext)

rawtext <- gsub("'re", " are", rawtext)

rawtext <- gsub("'ve", " have", rawtext)

rawtext <- gsub("'m", " am", rawtext)

rawtext <- gsub("'d", " would", rawtext)

rawtext <- gsub("'ld", " would", rawtext)

rawtext <- gsub("'s", "", rawtext)

return(rawtext)

}

rawtext <- fix_contractions(rawtext)

# Strip whitespace

rawtext <- stripWhitespace(rawtext)

return(rawtext)

}

# Remove stop words

removestopwords <- function(rawtext, remove = NULL, retain = NULL) {

# Remove stop words

stopwords_custom <- stopwords::stopwords("en", source = "snowball")

stopwords_custom <- c(stopwords_custom, remove)

stopwords_retain <- retain

stopwords_custom <- stopwords_custom[!stopwords_custom %in% stopwords_retain]

rawtext <- removeWords(rawtext, stopwords_custom)

return(rawtext)

}

## Word Stemming

wordstem <- function(rawtext) {

# Stemming words

rawtext <- stemDocument(rawtext)

return(rawtext)

}

## Remove Non-Alpha

removenonalpha <- function(rawtext) {

# Remove punctuation, numbers, and other non-alphanumeric characters

rawtext <- removePunctuation(rawtext)

rawtext <- removeNumbers(rawtext)

rawtext <- gsub("[^[:alnum:]///' ]", "", rawtext)

rawtext <- gsub("[']", "", rawtext)

return(rawtext)

}

# Remove JavaScript from WordClouds

library("EBImage")

embed_htmlwidget <- function(widget, rasterise = T) {

outputFormat = knitr::opts_knit$get("rmarkdown.pandoc.to")

if (rasterise || outputFormat == "latex") {

html.file = tempfile("tp", fileext = ".html")

png.file = tempfile("tp", fileext = ".png")

htmlwidgets::saveWidget(widget, html.file, selfcontained = FALSE)

webshot::webshot(html.file, file = png.file, vwidth = 700, vheight = 500,

delay = 10)

img = EBImage::readImage(png.file)

EBImage::display(img)

} else {

widget

}

}

Sentiment analysis is a subfield of natural language processing (NLP) that uses the sentiment scores of individual words to describe positive and negative language in a text. For this analysis, I parsed the text of each of the seven books into paragraph triplets averaging roughly 80-90 words. I then scored the words within those paragraph triplets to define the sentiment of these “text snapshots”.

Next, I organized the text by characters (heroes and villains) and then by Hogwarts Houses to understand which had the most positive or negative sentiment in the 80-90 or so words around their mention. Finally, I looked at sentiment per page to see the “rollercoaster of emotions” that occurs during each Hogwarts school year.
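As a minimal sketch of the "paragraph triplet" idea, the snippet below groups consecutive paragraphs in threes using base R only. The `paragraphs` vector is a hypothetical stand-in for one book's text, and the variable names are illustrative rather than the ones used in the analysis.

```r
# Hypothetical stand-in for one book's paragraphs (not the real text)
paragraphs <- c("p one", "p two", "p three", "p four", "p five", "p six", "p seven")

# Assign each paragraph to a group of three: 1, 1, 1, 2, 2, 2, 3
group <- ceiling(seq_along(paragraphs) / 3)

# Paste each group into a single document; the last group may hold fewer than three
triplets <- as.vector(tapply(paragraphs, group, paste, collapse = " "))

# Word count per triplet, to check the size of each "text snapshot"
word_counts <- lengths(strsplit(triplets, " +"))

print(triplets)
print(word_counts)
```

Grouping by `ceiling(index / 3)` keeps a short final group intact instead of padding it with missing values.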

Load Data

setwd("C:/Users/siebe/Documents/07_Books/Harry Potter/")

titles <- c("Philosopher's Stone", "Chamber of Secrets", "Prisoner of Azkaban", "Goblet of Fire",

"Order of the Phoenix", "Half-Blood Prince", "Deathly Hallows")

html <- c("Harry_Potter_and_the_Philosophers_Stone.html", "Harry_Potter_and_the_Chamber_of_Secrets.html",

"Harry_Potter_and_the_Prisoner_of_Azkaban.html", "Harry_Potter_and_the_Goblet_of_Fire.html",

"Harry_Potter_and_the_Order_of_the_Phoenix.html", "Harry_Potter_and_the_Half-Blood_Prince.html",

"Harry_Potter_and_the_Deathly_Hallows.html")

books <- tibble(Text = as.character(), Book = as.character())

para3 <- tibble(Text = as.character(), Book = as.character())

for (i in 1:7) {

rawtext <- read_html(html[i]) %>% html_nodes(xpath = "/html/body/p") %>% html_text(trim = TRUE)

wordcount <- sapply(strsplit(rawtext, " "), length)

paragraph <- rawtext[wordcount >= 3]

# Book Level Documents

books <- rbind(books, tibble(Text = str_c(paragraph, collapse = " "), Book = titles[i]))

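# Paragraph Level Documents

# Cap the index at the last paragraph so a short final group is not padded with NA

triplet <- do.call(rbind, lapply(seq(1, length(paragraph), by = 3), function(x) tibble(Text = str_c(paragraph[x:min(x +

2, length(paragraph))], collapse = " "), Book = titles[i])))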

para3 <- rbind(para3, triplet)

}

# Page Level Documents

pages <- books %>% unnest_tokens(Text, Text, strip_punct = T, to_lower = F) %>% group_by(Book,

Page = dplyr::row_number()%/%250) %>% dplyr::summarize(Text = stringr::str_c(Text,

collapse = " ")) %>% mutate(Page = dplyr::row_number()) %>% ungroup()

# Place books in chronological order

books$Book <- factor(books$Book, levels = titles)

para3$Book <- factor(para3$Book, levels = titles)

pages$Book <- factor(pages$Book, levels = titles)

Heroes and Villains

Which of the heroes and villains have the most positive/negative sentiment?

In this sentiment analysis, I was interested in seeing which characters have the most positive and, conversely, negative sentiment in the text around them. Using paragraph triplets (averaging around 80-90 words), I observed the count of positive and negative words around select characters.
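To make the scoring concrete, here is a small worked sketch of a positive/negative balance score, (pos - neg) / (pos + neg) * 100, which is the quantity the `average` helper in the code below computes. The function name `balance` and the example word scores are hypothetical and are not taken from the VADER lexicon.

```r
# Balance score: (pos - neg) / (pos + neg) * 100, with 0 when no word is scored
balance <- function(scores) {
  pos <- sum(scores[scores > 0], na.rm = TRUE)       # total positive weight
  neg <- abs(sum(scores[scores < 0], na.rm = TRUE))  # total negative weight
  if (pos + neg == 0) {
    return(0)                                        # only neutral words
  }
  (pos - neg) / (pos + neg) * 100
}

# Hypothetical word-level scores for one text snapshot
example_scores <- c(1.5, 0, -0.5, 0, 1.0)

# pos = 2.5 and neg = 0.5, so the balance is 2 / 3 * 100
print(balance(example_scores))
```

The score is bounded between -100 (only negative words) and 100 (only positive words), which is why the charts use a "-100% to 100%" axis.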

I created three character dictionaries to compare against each other. The first contains our main trio, who are mentioned in most of the text. The second contains the heroes (and organizations) outside of the main trio. The third contains the villains of the story arc. Note that, without giving away spoilers, at least one of the villains arguably turns out to be a good character, but in terms of text spends most of the story on the dark side.
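Matching a dictionary name against the text relies on word boundaries, so that "ron" does not match inside "baron" or "ronald". A minimal sketch with made-up sentences (the `docs` vector is hypothetical):

```r
# Hypothetical lowercase documents
docs <- c("harry met ron at the station",
          "the baron spoke to hermione",
          "ronald waved")

# Wrap the name in \\b so it only matches as a whole word
pattern <- paste0("\\b", "ron", "\\b")

hits <- grepl(pattern, docs)  # logical flag per document
ids <- which(hits)            # row positions of matching documents

print(hits)
print(ids)
```

The same pattern works for multi-word entries such as "death eaters", since the boundaries are only checked at the two ends of the phrase.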

# Create Dictionaries

trio <- c("harry", "ron", "hermione")

pasteNQ("Main Characters:")

paste(trio)

cat("\n")

heroes <- c("lily", "james", "hagrid", "dumbledore", "sirius", "lupin", "moody",

"slughorn", "dobby", "cedric", "luna", "tonks", "mcgonagall", "ginny", "order of the phoenix",

"neville")

pasteNQ("Number of Heroes:", length(heroes))

pasteNQ("Including:")

paste(heroes)

cat("\n")

villians <- c("voldemort", "nagini", "snape", "draco", "lucius", "umbridge", "pettigrew",

"dementor", "dementors", "greyback", "bellatrix", "quirrell", "riddle", "death eaters",

"aragog", "basilisk", "dudley", "vernon", "petunia")

pasteNQ("Number of Villians:", length(villians))

pasteNQ("Including:")

paste(villians)

[1] Main Characters:

[1] "harry" "ron" "hermione"

[1] Number of Heroes: 16

[1] Including:

[1] "lily" "james" "hagrid"

[4] "dumbledore" "sirius" "lupin"

[7] "moody" "slughorn" "dobby"

[10] "cedric" "luna" "tonks"

[13] "mcgonagall" "ginny" "order of the phoenix"

[16] "neville"

[1] Number of Villians: 19

[1] Including:

[1] "voldemort" "nagini" "snape" "draco" "lucius"

[6] "umbridge" "pettigrew" "dementor" "dementors" "greyback"

[11] "bellatrix" "quirrell" "riddle" "death eaters" "aragog"

[16] "basilisk" "dudley" "vernon" "petunia"

Sentiment Levels

# Sentiment Scores

average <- function(x) {

pos <- sum(x[x > 0], na.rm = T)

neg <- sum(x[x < 0], na.rm = T) %>% abs()

neu <- length(x[x == 0])

bal <- ((pos - neg)/(pos + neg)) * 100

y <- ifelse(is.nan(bal), 0, bal %>% as.numeric())

return(y)

}

CleanText <- basicclean(para3$Text)

para3$Score <- sapply(CleanText, function(x) getSentiment(x, dictionary = "vader",

score.type = average))

para3$Cross <- NA

para3$Cross <- ifelse(para3$Score < 0, "Negative", para3$Cross)

para3$Cross <- ifelse(para3$Score > 0, "Positive", para3$Cross)

para3$Cross <- ifelse(para3$Score == 0, "Neutral", para3$Cross)

pasteNQ("Sentiment in Paragraphs (%)")

propTab(para3$Cross)

[1] Sentiment in Paragraphs (%)

Negative Neutral Positive <NA>

43 10 47 0

Sentiment of Heroes

Surprisingly, Professor Slughorn has the most positive text around him. This is perhaps because Harry, for the first time, enjoyed and excelled in potions with him as teacher. Additionally, Harry spent a non-trivial amount of time under the Felix Felicis potion while with Professor Slughorn.

Fan favorite Luna Lovegood has the second most positive text around her. This was not surprising, as she has a quirky, upbeat personality.

Professor Moody had the most negative sentiment. This can be due both to his usual sour personality and to the fact that, for the majority of the time we were reading about him, it was the villain Barty Crouch, Jr. in disguise.

Harry’s mother, Lily, unsurprisingly has the second most negative sentiment as she is mostly mentioned when Harry is thinking back about her death.

# Subset text mentioning a hero

heroes_sent <- data.frame(Hero = NA, Sentiment = NA)

# Find mean of sentiment scores for each hero and create ID of all heroes for

# total row

heroes_ID <- c()

for (string in heroes) {

findstr <- paste0("\\b", string, "\\b")

heroes_sent <- rbind(heroes_sent, c(string, para3 %>% as.data.frame() %>% .[grepl(findstr,

CleanText), "Score"] %>% average() %>% as.numeric()))

heroes_ID <- c(heroes_ID, grep(findstr, CleanText))

}

# Sort data

heroes_sent <- heroes_sent %>% .[order(heroes_sent$Sentiment %>% as.numeric()), ] %>%

tidyr::drop_na()

max_sent <- max(heroes_sent$Sentiment %>% as.numeric() %>% abs() + 1)

# Add hero total

heroes_ID <- unique(heroes_ID)

heroes_sent <- rbind(heroes_sent, c("average of heroes", para3 %>% as.data.frame() %>%

.[heroes_ID, "Score"] %>% average() %>% as.numeric()))

# Graph

heroes_sent %>% mutate(Hero = factor(Hero, levels = Hero)) %>% ggplot(aes(x = Hero,

y = Sentiment %>% as.numeric() %>% round(1), fill = Hero)) + geom_col() + coord_flip() +

Grph_theme() + ylab("Sentiment (-100% to 100%)") + xlab("") + ggtitle("Sentiment of Heroes") +

ylim(max_sent * -1, max_sent) + scale_fill_manual(values = c(viridis(nrow(heroes_sent) -

1), "black")) + guides(fill = FALSE)

Sentiment of Villains

It makes sense that Tom Riddle, Professor Quirrell, and perhaps even Professor Snape contain more positive text than negative text.

Professor Umbridge, surprisingly, has the most positive sentiment. As a character, she projects an “everything is fine and I’m here to help” attitude while she seeks to torture the main trio.

I intentionally defined “dementor” and “dementors” separately. In the plural, they contain high sentiment. However, the most interesting takeaway is that, in the singular, a dementor has more positive than negative text around it. This actually makes sense, as Harry must think of positive thoughts and memories to combat the villains that are the personification of depression.

Unsurprisingly, the werewolf, ancient snake, and giant spider all have extremely negative sentiment.

# Subset text mentioning a villain

villians_sent <- data.frame(Villian = NA, Sentiment = NA)

# Find mean of sentiment scores for each villain and create ID of all villains

# for total row

villians_ID <- c()

for (string in villians) {

findstr <- paste0("\\b", string, "\\b")

villians_sent <- rbind(villians_sent, c(string, para3 %>% as.data.frame() %>%

.[grepl(findstr, CleanText), "Score"] %>% average() %>% as.numeric()))

villians_ID <- c(villians_ID, grep(findstr, CleanText))

}

# Sort data

villians_sent <- villians_sent %>% .[order(villians_sent$Sentiment %>% as.numeric()),

] %>% tidyr::drop_na()

max_sent <- max(villians_sent$Sentiment %>% as.numeric() %>% abs() + 1)

# Add villain total

villians_ID <- unique(villians_ID)

villians_sent <- rbind(villians_sent, c("average of villians", para3 %>% as.data.frame() %>%

.[villians_ID, "Score"] %>% average() %>% as.numeric()))

# Graph

villians_sent %>% mutate(Villian = factor(Villian, levels = Villian)) %>% ggplot(aes(x = Villian,

y = Sentiment %>% as.numeric() %>% round(1), fill = Villian)) + geom_col() +

coord_flip() + Grph_theme() + ylab("Sentiment (-100% to 100%)") + xlab("") +

ggtitle("Sentiment of Villians") + ylim(max_sent * -1, max_sent) + scale_fill_manual(values = c(viridis(nrow(villians_sent) -

1), "black")) + guides(fill = FALSE)

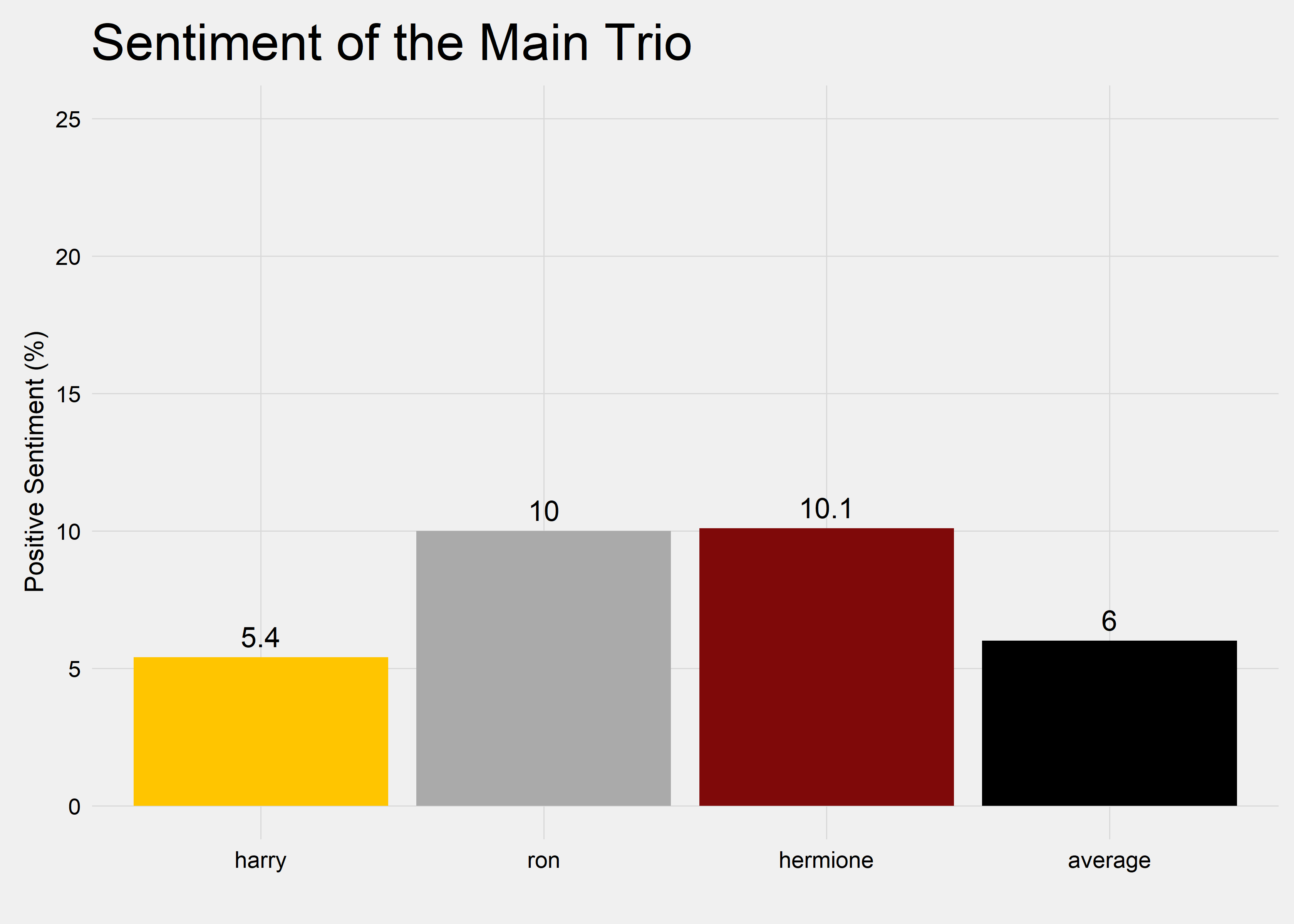

Sentiment of Main Trio

Harry has less positive sentiment in the text around him than Ron and Hermione. This probably has a lot to do with his angsty stint in Order of the Phoenix (Book 5), where I think the caps lock on J.K. Rowling’s keyboard may have broken under heavy use.

# Subset text mentioning a main character

trio_sent <- data.frame(Trio = NA, Sentiment = NA)

# Find mean of sentiment scores for each main character and create ID of all trio

# for total row

trio_ID <- c()

for (string in trio) {

findstr <- paste0("\\b", string, "\\b")

trio_sent <- rbind(trio_sent, c(string, para3 %>% as.data.frame() %>% .[grepl(findstr,

CleanText), "Score"] %>% average() %>% as.numeric()))

trio_ID <- c(trio_ID, grep(findstr, CleanText))

}

# Sort data

trio_sent <- trio_sent %>% .[order(trio_sent$Sentiment %>% as.numeric()), ] %>% tidyr::drop_na()

# Add main character total

trio_ID <- unique(trio_ID)

trio_sent <- rbind(trio_sent, c("average", para3 %>% as.data.frame() %>% .[trio_ID,

"Score"] %>% average() %>% as.numeric()))

# Graph

trio_sent %>% mutate(Trio = factor(Trio, levels = Trio)) %>% ggplot(aes(x = Trio,

y = Sentiment %>% as.numeric() %>% round(1), fill = Trio, label = Sentiment)) +

geom_col() + geom_text(aes(label = Sentiment %>% as.numeric() %>% round(1)),

vjust = -0.5) + Grph_theme() + ylab("Positive Sentiment (%)") + xlab("") + ggtitle("Sentiment of the Main Trio") +

ylim(0, 25) + scale_fill_manual(values = c("#FFC500", "#AAAAAA", "#7F0909", "black")) +

guides(fill = FALSE)

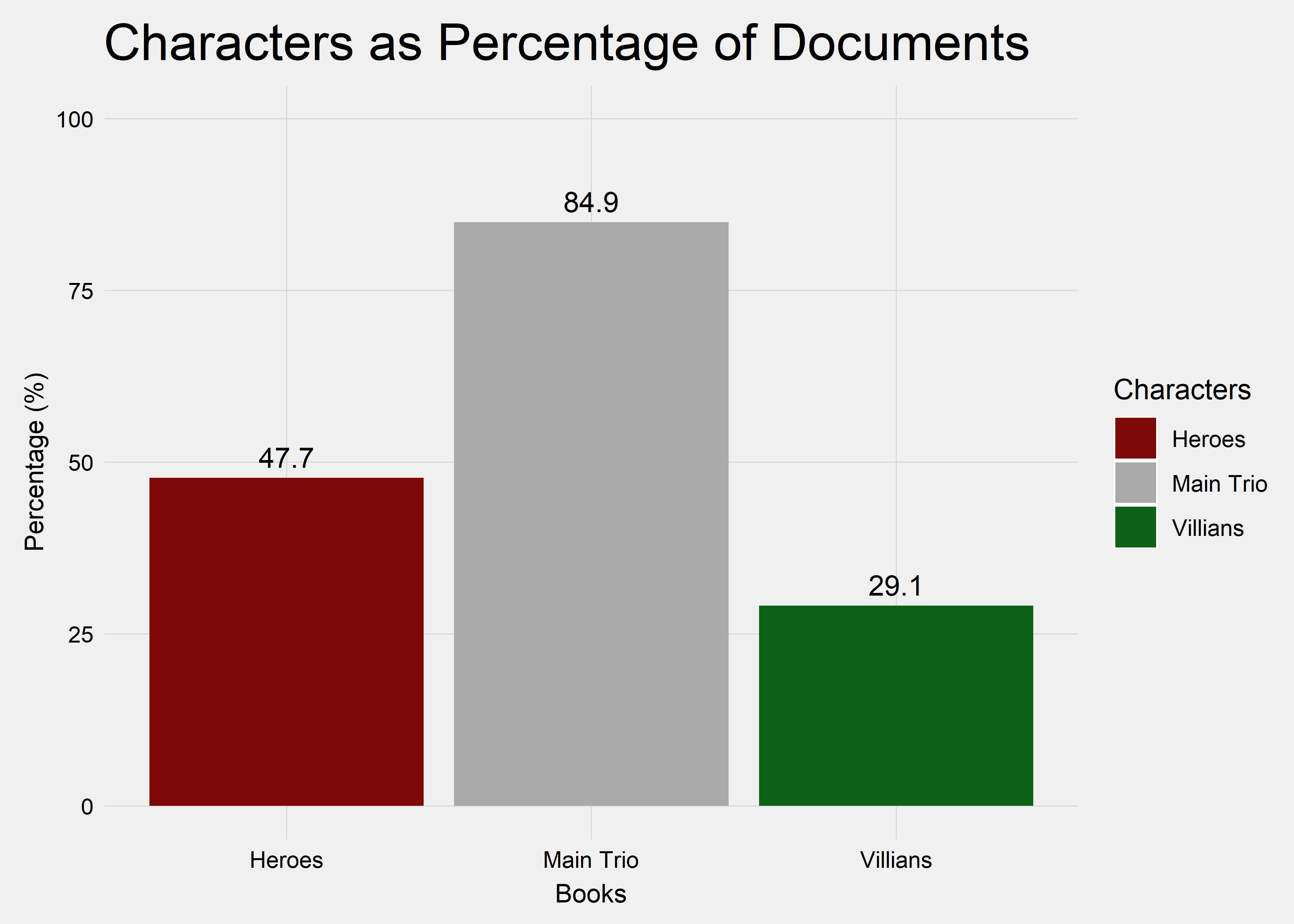

Percentage of Documents

Here, I wanted to note the prevalence of the main trio, the heroes, and the villains. The main trio were mentioned in ~85% of paragraph triplets. The heroes outside the main trio were in less than 50% of paragraph triplets, while villains were much rarer (less than 30%).

This demonstrates that J.K. Rowling prefers to focus on the likeable characters and keeps the overall story arc, featuring the villains, to a minimum.
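The percentages above are the share of text snapshots that mention at least one name from a dictionary. A minimal sketch of that calculation, using a hypothetical four-document corpus and a two-name dictionary:

```r
# Hypothetical lowercase documents and dictionary
docs <- c("harry and ron", "snape and draco", "the castle at night", "hermione and snape")
names_to_find <- c("snape", "draco")

# Collect the positions of every document that mentions any name
ids <- c()
for (name in names_to_find) {
  ids <- c(ids, grep(paste0("\\b", name, "\\b"), docs))
}

# A document mentioning two names should only be counted once
ids <- unique(ids)

# Share of documents with at least one mention, in percent
share <- round(length(ids) / length(docs) * 100, 1)
print(share)
```

Deduplicating with `unique()` matters here: without it, a snapshot that names both Snape and Draco would be counted twice and inflate the percentage.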

docs_prop <- tibble(Percentage = c(round(length(trio_ID)/nrow(para3) * 100, 1), round(length(heroes_ID)/nrow(para3) *

100, 1), round(length(villians_ID)/nrow(para3) * 100, 1)), Characters = c("Main Trio",

"Heroes", "Villians"))

colors <- c(Heroes = "#7F0909", Villians = "#0D6217", `Main Trio` = "#AAAAAA")

ggplot(docs_prop, mapping = aes(x = Characters, y = Percentage, fill = Characters,

label = Percentage)) + geom_col() + geom_text(aes(label = Percentage), vjust = -0.5) +

ggtitle("Characters as Percentage of Documents") + Grph_theme() + scale_fill_manual(values = colors) +

theme(legend.position = "right") + ylim(0, 100) + labs(x = "Characters", y = "Percentage (%)",

color = "Legend")

Characters Across Books

How does the sentiment of these heroes and villains change across each book?

In the chart below, I show the share of negative text snapshots for the main trio, heroes, and villains as the series progresses. The main takeaways I saw are:

- Negative sentiment in the text around the villains grows substantially

- Negative sentiment was more stable for the main trio and the heroes

- Goblet of Fire (Book 4) had the largest disconnect between the text mentioning villains and the text mentioning the main trio or the heroes: negative sentiment was high for the villains but low, relative to the other books, for non-villains

- Chamber of Secrets (Book 2) was the only book where text mentioning the main trio or the heroes was more negative than text mentioning the villains - largely because Tom Riddle’s tale likely starts with positive sentiment

- Deathly Hallows (Book 7) was the book featuring the most negative sentiment
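The per-book figures behind these takeaways are the percentage of snapshots labelled negative within each book. A minimal base R sketch with made-up labels (the `snapshots` data frame and its book names are hypothetical):

```r
# Hypothetical snapshot labels for two books
snapshots <- data.frame(
  Book = c("Book A", "Book A", "Book A", "Book A",
           "Book B", "Book B", "Book B", "Book B"),
  Cross = c("Negative", "Positive", "Neutral", "Negative",
            "Negative", "Positive", "Positive", "Positive")
)

# Flag negative snapshots as 1 and everything else as 0
snapshots$Negative <- as.numeric(snapshots$Cross == "Negative")

# The mean of a 0/1 flag is the share of negative snapshots per book
pct_negative <- aggregate(Negative ~ Book, data = snapshots,
                          FUN = function(x) mean(x) * 100)
print(pct_negative)
```

Because neutral snapshots stay in the denominator, this measure answers "how often is the text negative", not "how negative is the text compared with positive text".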

# Main Characters Sentiment

trio_grp <- para3[trio_ID, ] %>% mutate(Negative = recode(Cross, Positive = 0, Neutral = 0,

Negative = 1), Neutral = recode(Cross, Positive = 0, Neutral = 1, Negative = 0)) %>%

group_by(Book) %>% summarize(`Trio Sentiment` = mean(Negative, na.rm = T) * 100)

# Heroes Sentiment

heroes_grp <- para3[heroes_ID, ] %>% mutate(Negative = recode(Cross, Positive = 0,

Neutral = 0, Negative = 1), Neutral = recode(Cross, Positive = 0, Neutral = 1,

Negative = 0)) %>% group_by(Book) %>% summarize(`Heroes Sentiment` = mean(Negative,

na.rm = T) * 100)

# Villians Sentiment

villians_grp <- para3[villians_ID, ] %>% mutate(Negative = recode(Cross, Positive = 0,

Neutral = 0, Negative = 1), Neutral = recode(Cross, Positive = 0, Neutral = 1,

Negative = 0)) %>% group_by(Book) %>% summarize(`Villians Sentiment` = mean(Negative,

na.rm = T) * 100)

# Plot

df <- inner_join(heroes_grp, villians_grp, by = "Book") %>% as_tibble()

df <- inner_join(trio_grp, df, by = "Book")

colors <- c(Heroes = "#7F0909", Villians = "#0D6217", `Main Trio` = "#AAAAAA")

ggplot(df, mapping = aes(x = Book, group = NA)) + geom_line(aes(y = `Trio Sentiment`,

color = "Main Trio"), size = 3) + geom_line(aes(y = `Heroes Sentiment`, color = "Heroes"),

size = 1.5) + geom_line(aes(y = `Villians Sentiment`, color = "Villians"), size = 1.5) +

ggtitle("Sentiment of Heroes/Villians Across Books") + Grph_theme() + scale_color_manual(values = colors) +

theme(axis.text.x = element_text(size = 8, angle = 45)) + theme(legend.position = "right") +

ylim(0, 60) + labs(x = "Books", y = "Negative Sentiment (%)", color = "Legend")

Treatment of the Hogwarts Houses

Which Hogwarts House contains the most positive sentiment?

Ok, I needed to know the sentiment surrounding the four Hogwarts Houses: Gryffindor, Slytherin, Ravenclaw and Hufflepuff.

# Create Dictionaries

houses <- c("gryffindor", "slytherin", "ravenclaw", "hufflepuff")

pasteNQ("Houses:")

paste(houses)

[1] Houses:

[1] "gryffindor" "slytherin" "ravenclaw" "hufflepuff"

Sentiment of Houses

…and the best house, Hufflepuff!, easily contained the most positive sentiment.

# Subset text mentioning a house

houses_sent <- data.frame(Houses = NA, Sentiment = NA)

# Find mean of sentiment scores for each house and create ID of all

# houses for total row

houses_ID <- c()

for (string in houses) {

findstr <- paste0("\\b", string, "\\b")

houses_sent <- rbind(houses_sent, c(string, para3 %>% as.data.frame() %>% .[grepl(findstr,

CleanText), "Score"] %>% average() %>% as.numeric()))

houses_ID <- c(houses_ID, grep(findstr, CleanText))

assign(paste0(string, "_ID"), grep(findstr, CleanText))

}

# Sort data

houses_sent <- houses_sent %>% .[order(houses_sent$Sentiment %>% as.numeric()), ] %>%

tidyr::drop_na()

max_sent <- max(houses_sent$Sentiment %>% as.numeric() %>% abs() + 1)

# Add house total

houses_ID <- unique(houses_ID)

houses_sent <- rbind(houses_sent, c("average", para3 %>% as.data.frame() %>% .[houses_ID,

"Score"] %>% average() %>% as.numeric()))

# Graph

houses_sent %>% mutate(Houses = factor(Houses, levels = Houses)) %>% ggplot(aes(x = Houses,

y = Sentiment %>% as.numeric() %>% round(1), fill = Houses, label = Sentiment)) +

geom_col() + geom_text(aes(label = Sentiment %>% as.numeric() %>% round(1)),

vjust = -0.5) + Grph_theme() + ylab("Positive Sentiment") + xlab("") + ggtitle("Sentiment of Houses") +

ylim(0, max_sent) + scale_fill_manual(values = c("#0D6217", "#7F0909", "#000A90",

"#FFC500", "black")) + guides(fill = FALSE)

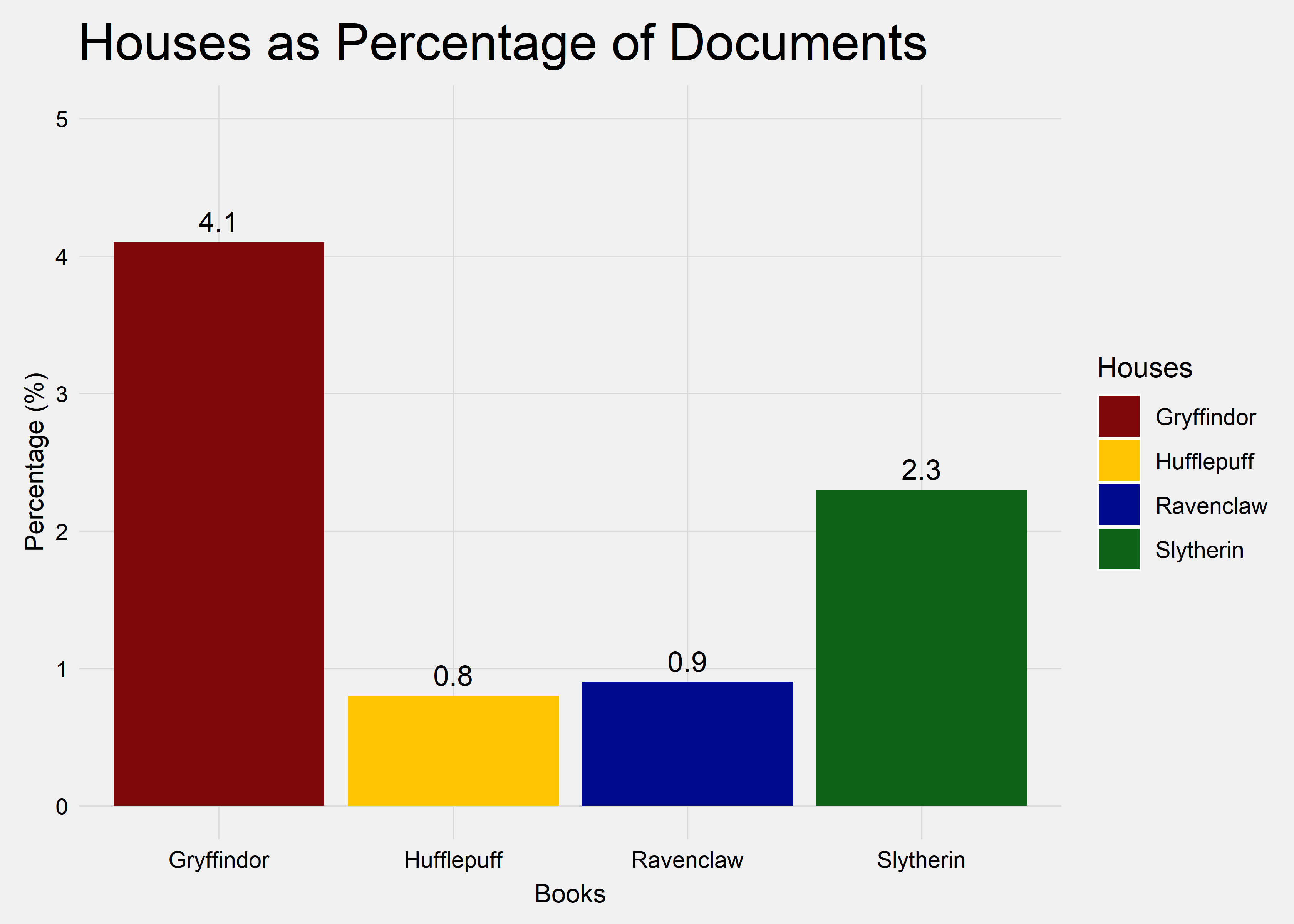

Percentage of Documents

It seemed that the houses were rarely mentioned. Gryffindor was easily mentioned the most, but was only mentioned in roughly 4% of the text. Slytherin was mentioned in a little over 2% of the text. Poor Hufflepuff and Ravenclaw were each mentioned in less than 1% of the text.

docs_prop <- tibble(Percentage = c(round(length(gryffindor_ID)/nrow(para3) * 100,

1), round(length(slytherin_ID)/nrow(para3) * 100, 1), round(length(ravenclaw_ID)/nrow(para3) *

100, 1), round(length(hufflepuff_ID)/nrow(para3) * 100, 1)), Houses = c("Gryffindor",

"Slytherin", "Ravenclaw", "Hufflepuff"))

colors <- c(Gryffindor = "#7F0909", Slytherin = "#0D6217", Ravenclaw = "#000A90",

Hufflepuff = "#FFC500")

ggplot(docs_prop, mapping = aes(x = Houses, y = Percentage, fill = Houses, label = Percentage)) +

geom_col() + geom_text(aes(label = Percentage), vjust = -0.5) + ggtitle("Houses as Percentage of Documents") +

Grph_theme() + scale_fill_manual(values = colors) + theme(legend.position = "right") +

ylim(0, 5) + labs(x = "Houses", y = "Percentage (%)", color = "Legend")

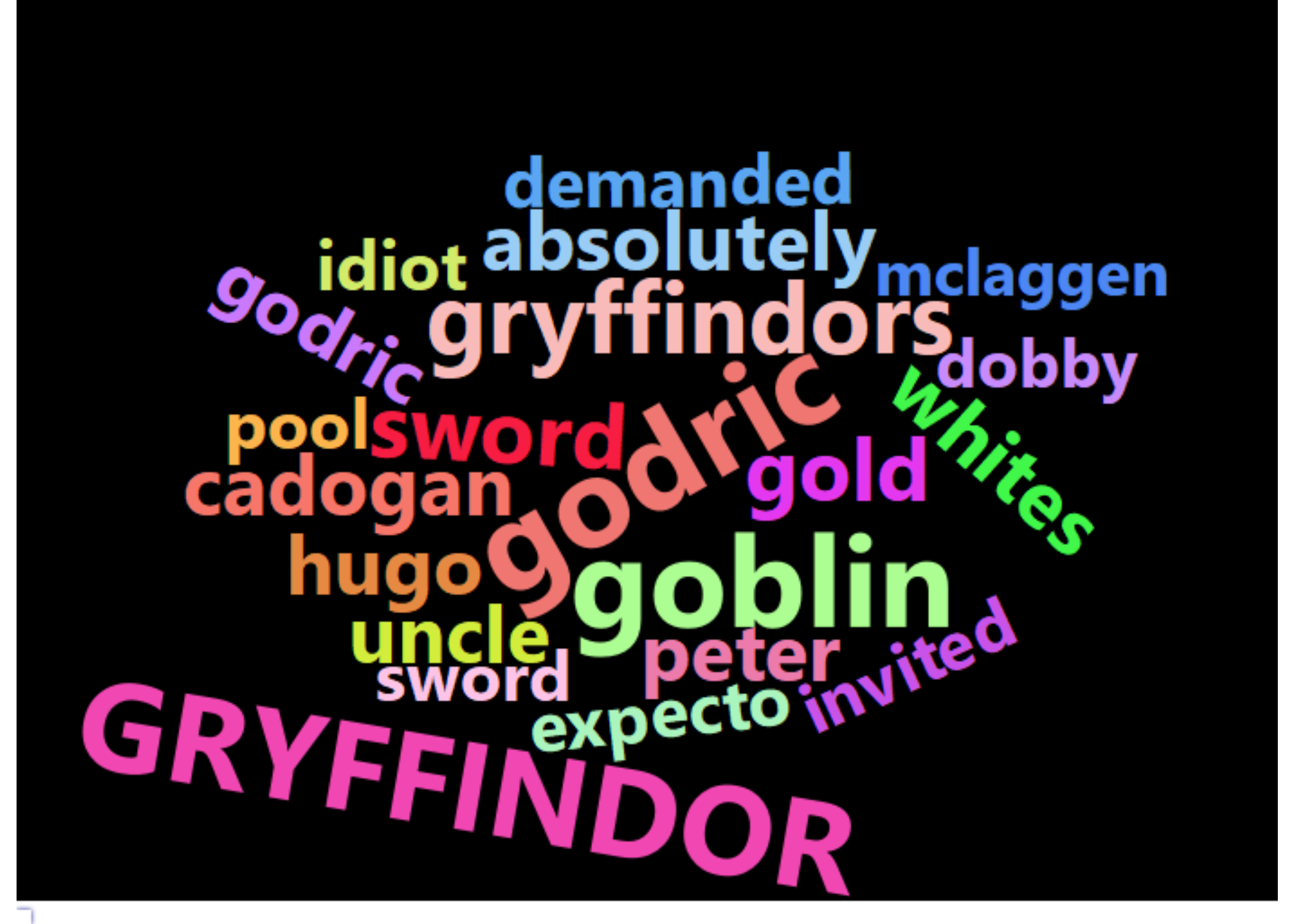

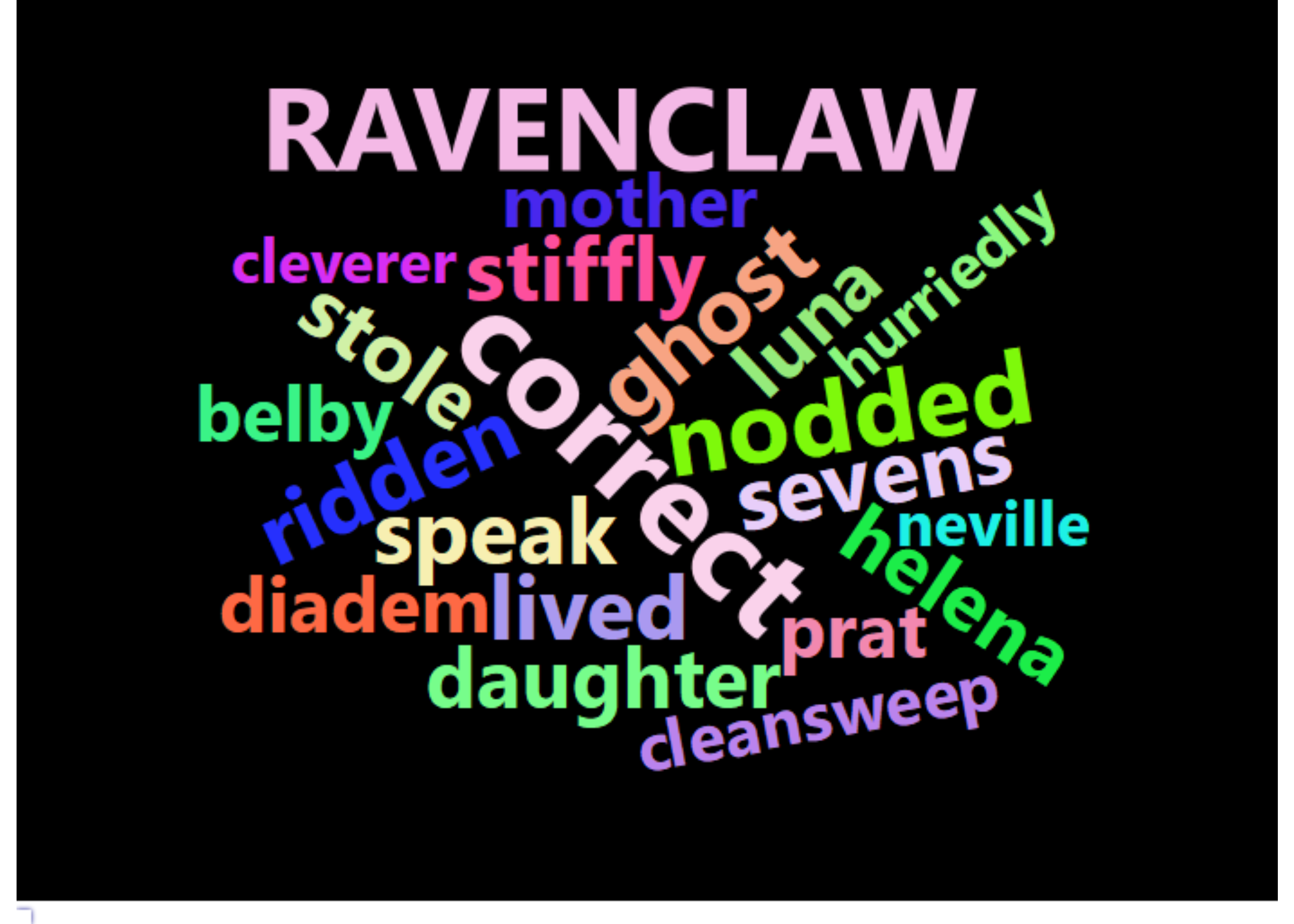

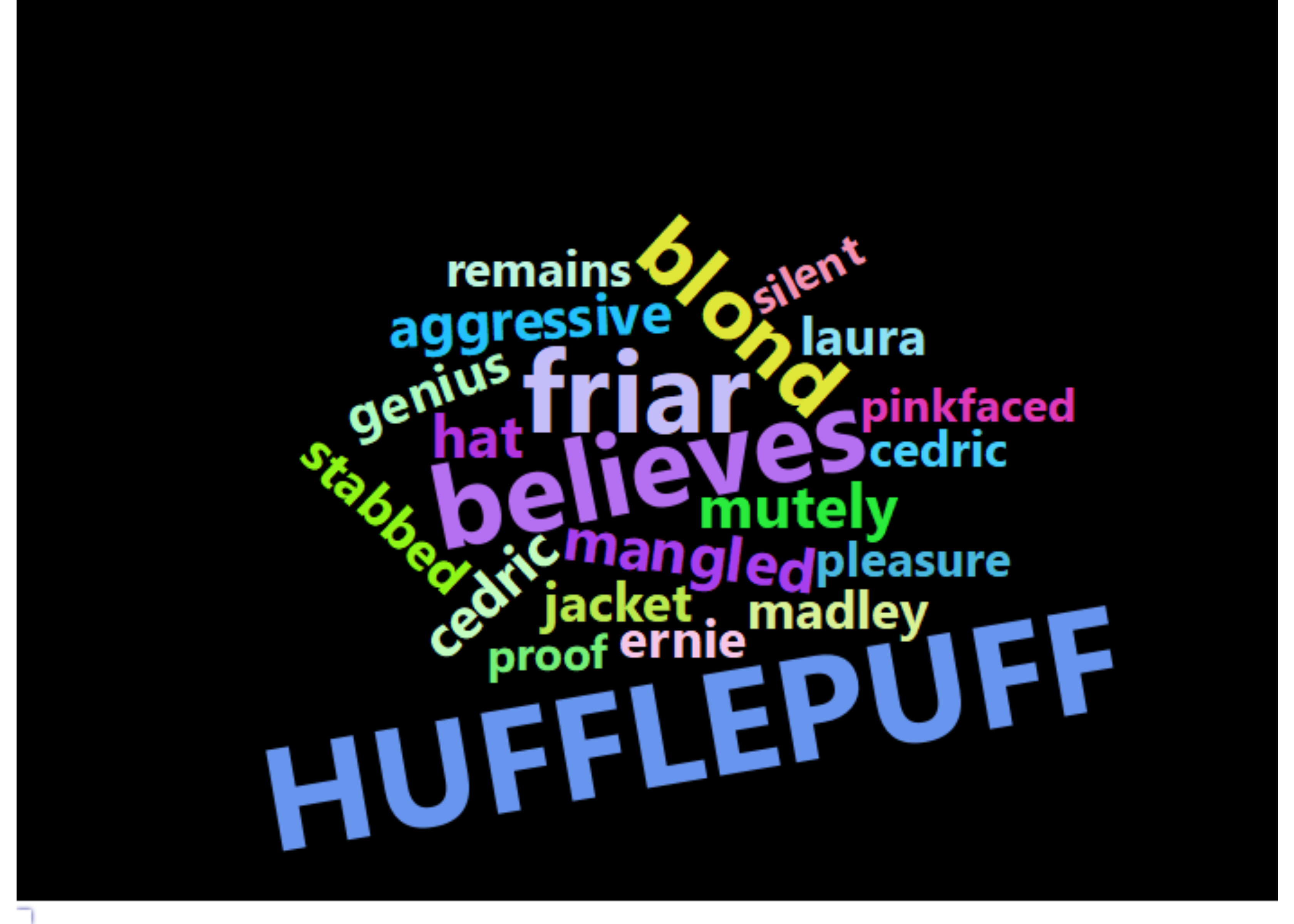

Descriptions of the Houses

For fun, here are the most specific words (using TF-IDF scores) to describe the four Houses.
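For readers unfamiliar with TF-IDF, the sketch below computes it by hand in base R, assuming the usual definition: term frequency within a document multiplied by the natural log of (number of documents / number of documents containing the term). The three tiny "documents" are invented for illustration.

```r
# Hypothetical tokenised documents
docs <- list(
  d1 = c("brave", "lion", "brave"),
  d2 = c("cunning", "snake", "lion"),
  d3 = c("loyal", "badger", "loyal", "lion")
)

vocab <- sort(unique(unlist(docs)))

# Term frequency: share of each document's tokens taken up by each word
tf <- t(sapply(docs, function(words) {
  counts <- as.vector(table(factor(words, levels = vocab)))
  counts / length(words)
}))
colnames(tf) <- vocab

# Inverse document frequency: words found in every document get log(1) = 0
idf <- log(length(docs) / colSums(tf > 0))

# TF-IDF: scale each column of tf by that word's idf
tf_idf <- sweep(tf, 2, idf, `*`)
print(round(tf_idf, 3))
```

A word such as "lion" that appears in every document scores zero, while "loyal" scores highest for `d3`; this is what makes the measure useful for picking out words specific to one house.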

set.seed(20)

para3$ID <- row.names(para3)

para3$CleanText <- para3$Text %>% basicclean() %>% removestopwords(remove = "said") %>%

removenonalpha()

# TFIDF Scores

TFIDF <- para3[houses_ID, ] %>% unnest_tokens(word, CleanText) %>% anti_join(get_stopwords()) %>%

count(ID, word, sort = T) %>% bind_tf_idf(word, ID, n) %>% arrange(desc(tf_idf))

Gryffindor_d <- TFIDF[TFIDF$ID %in% gryffindor_ID, ]

Slytherin_d <- TFIDF[TFIDF$ID %in% slytherin_ID, ]

Ravenclaw_d <- TFIDF[TFIDF$ID %in% ravenclaw_ID, ]

Hufflepuff_d <- TFIDF[TFIDF$ID %in% hufflepuff_ID, ]

# Gryffindor

Gryffindor <- Gryffindor_d %>% anti_join(Slytherin_d) %>% anti_join(Ravenclaw_d) %>%

anti_join(Hufflepuff_d)

cloud <- data.frame(words = rbind(Gryffindor[1:20, "word"], "GRYFFINDOR"), weight = rbind(Gryffindor[1:20,

"tf_idf"], 0.8))

wc_1 <- wordcloud2(cloud, size = 0.4, color = "random-light", backgroundColor = "black")

# Slytherin

Slytherin <- Slytherin_d %>% anti_join(Gryffindor_d) %>% anti_join(Ravenclaw_d) %>%

anti_join(Hufflepuff_d)

cloud <- data.frame(words = rbind(Slytherin[1:20, "word"], "SLYTHERIN"), weight = rbind(Slytherin[1:20,

"tf_idf"], 0.8))

wc_2 <- wordcloud2(cloud, size = 0.5, color = "random-light", backgroundColor = "black")

# Ravenclaw

Ravenclaw <- Ravenclaw_d %>% anti_join(Slytherin_d) %>% anti_join(Gryffindor_d) %>%

anti_join(Hufflepuff_d)

cloud <- data.frame(words = rbind(Ravenclaw[1:20, "word"], "RAVENCLAW"), weight = rbind(Ravenclaw[1:20,

"tf_idf"], 0.8))

wc_3 <- wordcloud2(cloud, size = 0.4, color = "random-light", backgroundColor = "black")

# Hufflepuff

Hufflepuff <- Hufflepuff_d %>% anti_join(Slytherin_d) %>% anti_join(Ravenclaw_d) %>%

anti_join(Gryffindor_d)

cloud <- data.frame(words = rbind(Hufflepuff[1:20, "word"], "HUFFLEPUFF"), weight = rbind(Hufflepuff[1:20,

"tf_idf"], 0.8))

wc_4 <- wordcloud2(cloud, size = 0.45, color = "random-light", backgroundColor = "black")

embed_htmlwidget(wc_1)

embed_htmlwidget(wc_2)

embed_htmlwidget(wc_3)

embed_htmlwidget(wc_4)

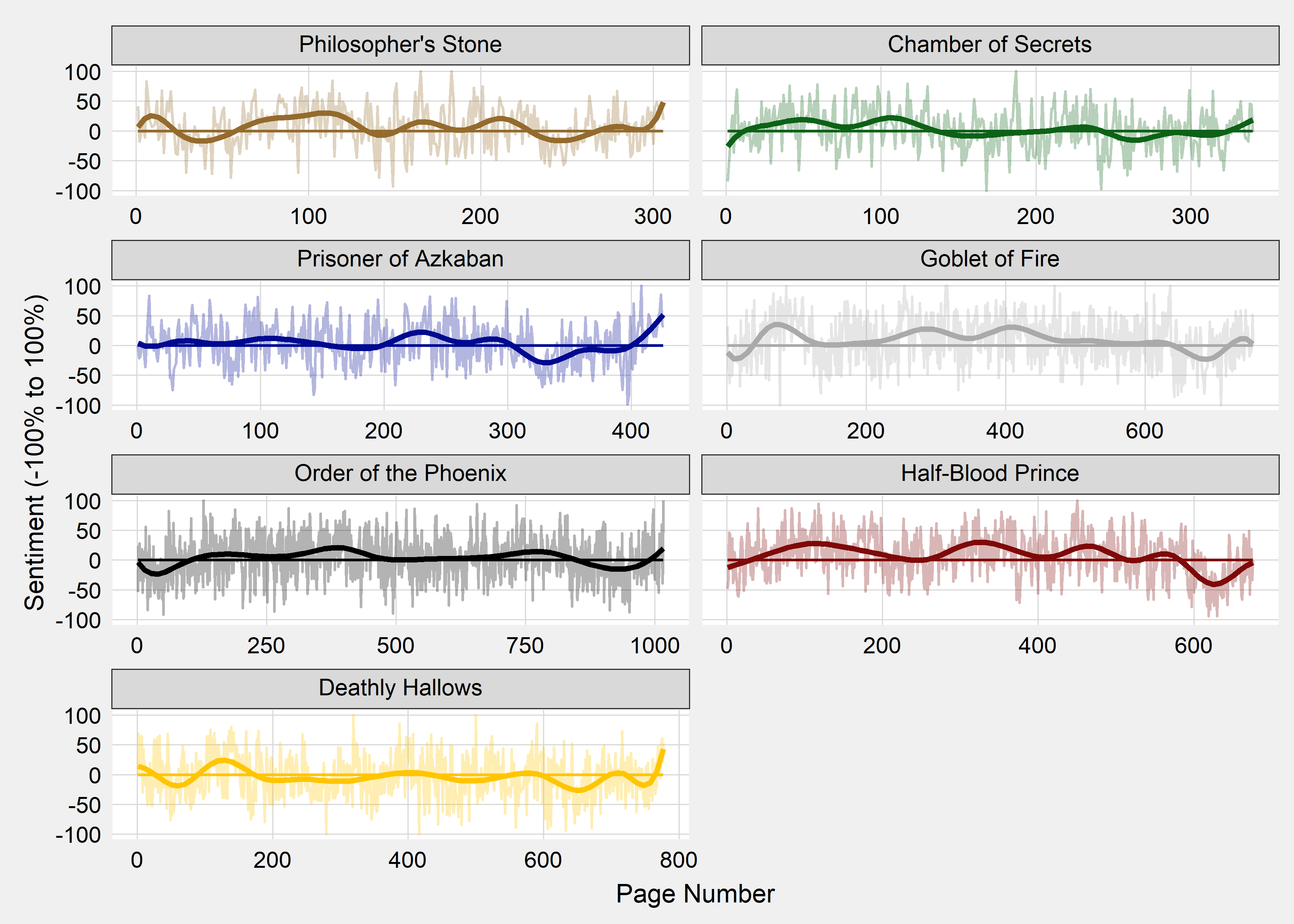

Emotional Rollercoaster

At the beginning of this document, I defined the page level as containing 250 words. I used the page level to observe how sentiment changed over the pages of each book.
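A minimal sketch of cutting a token stream into 250-word "pages" with integer division. The `words` vector is a hypothetical stand-in for a tokenised book, and this version subtracts one before dividing so that the first page is a full 250 words, which may differ slightly at page boundaries from the code used earlier.

```r
# Hypothetical token stream of 600 words
words <- paste0("w", 1:600)
page_size <- 250

# Words 1-250 fall on page 1, 251-500 on page 2, and so on
page <- (seq_along(words) - 1) %/% page_size + 1

# Paste the words of each page back into one document
pages_text <- as.vector(tapply(words, page, paste, collapse = " "))

# Check the page sizes; the last page holds the remainder
page_lengths <- lengths(strsplit(pages_text, " "))
print(page_lengths)
```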

Sentiment Levels

# Sentiment Scores

average <- function(x) {

pos <- sum(x[x > 0], na.rm = T)

neg <- sum(x[x < 0], na.rm = T) %>% abs()

neu <- length(x[x == 0])

bal <- ((pos - neg)/(pos + neg)) * 100

y <- ifelse(is.nan(bal), 0, bal %>% as.numeric())

return(y)

}

CleanText <- basicclean(pages$Text)

pages$Score <- sapply(CleanText, function(x) getSentiment(x, dictionary = "vader",

score.type = average))

pages$Cross <- NA

pages$Cross <- ifelse(pages$Score < 0, "Negative", pages$Cross)

pages$Cross <- ifelse(pages$Score > 0, "Positive", pages$Cross)

pages$Cross <- ifelse(pages$Score == 0, "Neutral", pages$Cross)

pasteNQ("Sentiment in Pages (%)")

propTab(pages$Cross)

[1] Sentiment in Pages (%)

Negative Neutral Positive

46 0 54

Sentiment by Page

How does sentiment usually change over the course of one school year?

Finally, we can view sentiment per page to see the rollercoaster of emotions throughout the books. The main takeaways that I saw are:

- Prisoner of Azkaban (Book 3) has a more negative final third than I remember

- Goblet of Fire (Book 4) has a very happy middle, when Harry was focusing on the Tri-Wizard tournament and the Yule Ball

- Despite containing a lot of Voldemort’s background, Half-Blood Prince (Book 6) was surprisingly positive until the end

- Deathly Hallows (Book 7) was mostly negative throughout

- And the most obvious trend has to be that the end of each book was negative until the final chapter, which always seems to end on a positive note - whether the heroes were victorious or Harry’s friends were providing him some support after a more difficult ending
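The smooth trend lines in the chart come from a linear model fitted on a B-spline basis of the page number. A minimal sketch of that kind of fit on simulated data (the `score` series below is random noise around a sine wave, not the book scores):

```r
set.seed(1)

# Simulated page-level scores: a slow wave plus noise
page <- 1:200
score <- 40 * sin(page / 30) + rnorm(200, sd = 25)

# Linear regression on a cubic B-spline basis with 15 degrees of freedom
fit <- lm(score ~ splines::bs(page, df = 15))

# Fitted values trace the smoothed trend through the noisy scores
trend <- fitted(fit)

plot(page, score, col = "grey", pch = 16)
lines(page, trend, lwd = 2)
```

More degrees of freedom let the curve follow shorter swings in sentiment; fewer give a flatter, more general arc for each book.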

# Grouping by pages for the x-axis

pages %>% group_by(Book) %>% mutate(Paragraph = row_number()) %>% ggplot(aes(Page,

Score, color = Book)) + Grph_theme() + theme(panel.background = element_rect(fill = "#FFFFFF",

color = "#FFFFFF")) + geom_line(alpha = 0.3, show.legend = FALSE) + geom_smooth(method = lm,

formula = y ~ splines::bs(x, 15), se = F, show.legend = FALSE) + geom_line(y = 0) +

scale_color_manual(values = c("#946B2D", "#0D6217", "#000A90", "#AAAAAA", "#000000",

"#7F0909", "#FFC500")) + ylim(-100, 100) + labs(x = "Page Number", y = "Sentiment (-100% to 100%)") +

facet_wrap(~Book, ncol = 2, scales = "free_x")

Next Steps

This was an analysis of the series’ tone. Previous analysis looked more broadly at the vocabulary, word distributions, and major themes/settings. Subsequent analysis will model comparisons of the books to the films (upcoming).